Statistics Discussion

Copyright © October 2004 Ted Nissen

TABLE OF

CONTENTS

2 Review and More Introduction

3 Central Values and the Organization of Data

8 Theoretical Distributions

Including the Normal Distribution

9 Samples and Sampling Distributions

11 The t Distribution and the

t-Test

12 Analysis of Variance: One-Way

Classification

13 Analysis of Variance: Factorial

Design

14 The Chi Square Distribution

16 Vista Formulas and Analysis

1 Introduction

1.1

1.2 Statistics Definition

1.2.1 Algebra and Statistics

1.2.1.1

Algebra

is a generalization of arithmetic in which letters representing numbers are

combined according to the rules of arithmetic

1.2.1.2

The

product of an algebraic expression, which combines several scores, is a

statistic.[1]

1.2.2 Descriptive Statistic

1.2.2.1

1.2.3 Inferential Statistics

1.2.3.1

1.3 Purpose of Statistics

1.3.1

1.4 Terminology

1.4.1

1.4.2 Populations, Samples, and

Subsamples

1.4.2.1

A

population consists of all members of some specified group. Actually, in

statistics, a population consists of the measurements on the members and not

the members themselves. A sample is a subset of a population. A subsample is a

subset of a sample. A population is arbitrarily defined by the investigator and

includes all relevant cases.

1.4.2.2

Investigators

are always interested in some population. Populations are often so large that

not all the members can be measured. The investigator must often resort to

measuring a sample that is small enough to be manageable but still

representative of the population.

1.4.2.3

Samples

are often divided into subsamples and relationships among the subsamples

determined. The investigator would then look for similarities or differences

among the subsamples.

1.4.2.4

Resorting

to the use of samples and subsamples introduces some uncertainty into the

conclusions because different samples from the same population nearly always

differ from one another in some respects. Inferential statistics are used to

determine whether or not such differences should be attributed to chance.

1.4.3 Parameters and Statistics

1.4.3.1

A

parameter is some numerical characteristic of a population. A statistic is some

numerical characteristic of a sample or subsample. A parameter is constant; it

does not change unless the population itself changes. There is only one number

that is the mean of the population; however, it often cannot be computed,

because the population is too large to be measured. Statistics are used as

estimates of parameters, although, as we suggested above, a statistic tends to

differ from one sample to another. If you have five samples from the same

population, you will probably have five different sample means. Remember that

parameters are constant; statistics are variable.

1.4.4 Variables

1.4.4.1

A

variable is something that exists in more than one amount or in more than one

form. Memory is a variable. The Wechsler Memory Scale is used to measure

people’s memory ability, and variation is found among the memory scores of any

group of people. The essence of measurement is the assignment of numbers on the

basis of variation.

1.4.4.2

Most

variables can be classified as quantitative variables. When a quantitative

variable is measured, the scores tell you something about the amount or degree

of the variable. At the very least, a larger score indicates more of the

variable than a smaller score does.

1.4.4.3

A

score has a range consisting of an upper limit and lower limit, which defines

the range. For example, 103=102.5-103.5, the numbers 102.5 and 103.5 are called

the lower limit and the upper limit of the score. The idea is that a score can

take any fractional value between 102.5 and 103.5, but all scores in that range

are rounded off to 103.

1.4.4.4

Some

variables are qualitative variables. With such variables, the scores (number)

are simple used as names; they do not have quantitative meaning. For example,

political affiliation is a qualitative variable.

1.5 Scales of Measurement

1.5.1 Introduction

1.5.1.1

Numbers

mean different things in different situations. Numbers are assigned to objects

according to rules. You need to distinguish clearly between the thing you are

interested in and the number that symbol9izes or stands for the thing. For

example, you have had lots of experience with the numbers 2 and 4. You can

state immediately that 4 is twice as much as 2. That statement is correct if

you are dealing with numbers themselves, but it may or may not be true when

those numbers are symbols for things. The statement is true if the numbers

refer to apples; four apples are twice as many as two apples. The statement is

not true if the numbers refer to the order that runners finish in a race.

Fourth place is not twice anything in relation to second place-not twice as

slow or twice as far behind the first-place runner. The point is that the

numbers 2 and 4 are used to refer to both apples and finish places in a race,

but the numbers mean different things in those two situations.

1.5.1.2

S.

S. Stevens (1946)[2] identified

four different measurement scales that help distinguish different kings of

situation in which numbers are assigned to objects. The four scales are;

nominal, ordinal, interval, and ratio.

1.5.2 Nominal Scale

1.5.2.1

Numbers

are used simply as names and have no real quantitative value. It is the scale

used for qualitative variables. Numerals on sports uniforms are an example;

here, 45 is different from 32, but that is about all we can say. The person

represented by 45 is not “more than” the person represented by 32, and

certainly it would be meaningless to try to add 45 and 32. Designating

different colors, different sexes, or different political parties by numbers

will produce nominal scales. With a nominal scale, you can even reassign the

numbers and still maintain the original meaning, which as only that the

numbered things differ. All things that are alike must have the same number.

1.5.3 Ordinal Scale

1.5.3.1

An

ordinal scale, has the characteristic of the nominal scale (different numbers

mean different things) plus the characteristic of indicating “greater than” or

“less than”. In the ordinal scale, the object with the number 3 has less or

more of something than the object with the number 5. Finish places in a race

are an example of an ordinal scale. The runners finish in rank order, with “1”

assigned to the winner, “2” to the runner-up, and so on. Here, 1 means less

time than 2. Other examples of ordinal scales are house number, Government

Service ranks like GS-5 and GS-7, and statements like “She is a better

mathematician than he is.”

1.5.4 Interval Scale

1.5.4.1

The

interval scale has properties of both the ordinal and nominal scales, plus the

additional property that intervals between the numbers are equal. “Equal

interval” means that the distance between the things represented by ”2” and “3” is the same as the distance

between the things represented by “3” and “4”. The centigrade thermometer is

based on an interval scale. The difference is temperature between 10° and 20°

is the same as the difference between 40° and 50°. The centigrade thermometer,

like all interval scales, has an arbitrary zero point. On the centigrade, this

zero point is the freezing point of water at sea level. Zero degrees on this

scale does not mean the complete absence of heat; it is simply a convenient

starting point. With interval data, we have one restriction; we may not make

simple ratio statements. We may not say that 100° is twice as hot as 50° or

that a person with an IQ of 60 is half as intelligent as a person with an IQ of

120.

1.5.5 Ratio Scale

1.5.5.1

The

fourth kind of scale, the ratio scale, has all the characteristics of the

nominal, ordinal, interval scales, plus one: it has a true zero point, which

indicates a complete absence of the thing measured. On a ratio scale, zero

means “none”. Height, weight, and time are measured with ratio scales. Zero

height, zero weight, and zero time mean thaqt no amount of these variables is

present. With a true zero point, you can make ratio statements like “16

kilograms is four times heavier than 4 kilograms.”

1.5.6 Conclusion

1.5.6.1

Having

illustrated with examples the distinctions among these four scales-it is

sometimes difficult to classify the variables used in the social and behavioural

sciences. Very often they appear to fall between the ordinal and interval

scales. It may happen that a score provides more information than simply rank,

but equal intervals cannot be proved. Intelligence test scores are an example.

In such cases, researchers generally treat the data as if they were based on an

interval scale.

1.5.6.2

The

main reason why this section on scales of measurement is important is that the

kind of descriptive statistics you can compute on your numbers depends to some

extent upon the kind of scale of measurement the numbers represent. For

example, it is not meaningful to compute a mean on nominal data such as the

numbers on football players’ jerseys. If the quarterback’s number is 12 and a

running back’s number is 23, the mean of the two numbers (17.5) has no meaning

at all.

1.6 Statistics and Experimental

Design

1.6.1 Introduction

1.6.1.1

Statistics

involves the manipulation of numbers and the conclusions based on those

manipulations. Experimental design deals with how to get the numbers in the first

place.

1.6.2 Independent and Dependent

Variables

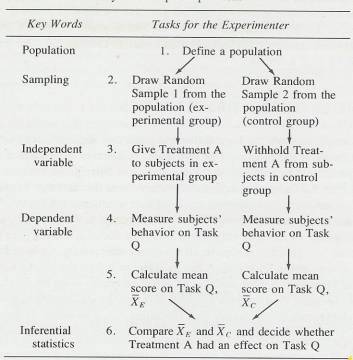

1.6.2.1

In

the design of a typical simple experiment, the experimenter is interested in

the effect that one variable (called the independent variable) has on some

other variable (called the dependent variable). Much research is designed to

discover cause-and-effect relationships. In such research, differences in the

independent variable are the presumed cause for differences in the dependent

variable. The experimenter chooses values for the independent variable, administers

a different value of the independent variable to each group of subjects, and

then measures the dependent variable for each subject. If the scores on the

dependent variable differ as a result of differences in the independent

variable, the experimenter may be able to conclude that there is a

cause-and-effect relationship.

1.6.3 Extraneous (Confounding)

Variables

1.6.3.1

One

of the problems with drawing cause-and-effect conclusions is that you must be

sure that changes in the scores on the dependent variable are the result of

changes in the independent variable and not the result of changes in some other

variables. Variables other than the independent variable that can cause changes

in the dependent variable are called extraneous variables.

1.6.3.2

It

is important, then, that experimenters be aware of and control extraneous

variables that might influence their results. The simplest way to control an

extraneous variable is to be sure all subjects are equal on that variable.

1.6.3.3

Independent

variables are often referred to as treatments because the experimenter

frequently asks “If I treat this group of subjects this way and treat another

group another way, will there be a difference in their behaviour?” The ways

that the subjects are treated constitute the levels of the independent variable

being studied, and experiments typically have two or more levels.

1.7 Brief History of Statistics

1.7.1

2

Review

and More Introduction

2.1 Review of Fundamentals

2.1.1 This section is designed to

provide you with a quick review of the rules of arithmetic and simple algebra.

We recommend that you work the problems as you come to them, keeping the

answers covered while you work. We assume that you once knew all these rules

and procedures but that you need to refresh your memory. Thus, we do not

include much explanation. For a textbook that does include basic explanations,

see Helen M. Walker.[3]

2.1.2 Definitions

2.1.2.1 Sum

2.1.2.1.1 The answer to an addition problem is called a sum. In Chapter 12, you will calculate a sum of squares, a quantity that is obtained by adding together some squared numbers.

2.1.2.2 Difference

2.1.2.2.1 The answer to a subtraction problem is called a difference. Much of what you will learn in statistics deals with differences and the extent to which they are significant. In Chapter 10, you will encounter a statistic called the standard error of a difference. Obviously, this statistic involves subtraction.

2.1.2.3

Product

2.1.2.3.1 The answer to a multiplication problem is called a product. Chapter 7 is about the product-moment correlation coefficient, which requires multiplication. Multiplication problems are indicated either by an x or by parentheses. Thus, 6 x 4 and (6)(4) call for the same operation.

2.1.2.4 Quotient

2.1.2.4.1 The

answer to a division problem is called a quotient. The IQ or intelligence quotient is based on the

division of two numbers. The two ways to indicate a division problem are  and —. Thus, 9

and —. Thus, 9  4 and 9/4 call for

the same operation. It is a good idea to

think of any common fraction as a division problem. The numerator is to be

divided by the denominator.

4 and 9/4 call for

the same operation. It is a good idea to

think of any common fraction as a division problem. The numerator is to be

divided by the denominator.

2.1.3 Decimals

2.1.3.1 Addition and Subtraction of Decimals.

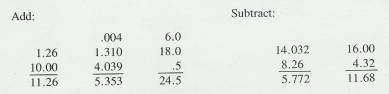

2.1.3.1.1 There is only one rule about the addition and subtraction of numbers that have decimals: keep .the decimal points in a vertical line. The decimal point in the answer goes directly below those in the problem. This rule is illustrated in the five problems below.

2.1.3.1.2 Example #1

2.1.3.1.2.1

2.1.3.2 Multiplication of Decimals

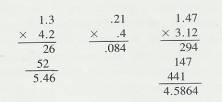

2.1.3.2.1 The basic rule for multiplying decimals is that the number of decimal places in the answer is found by adding up the number of decimal places in the two numbers that are being multiplied. To place the decimal point in the product, count from the right.

2.1.3.2.2 Example #2

2.1.3.2.2.1

2.1.3.3 Division of Decimals

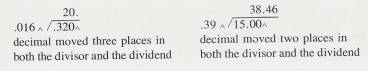

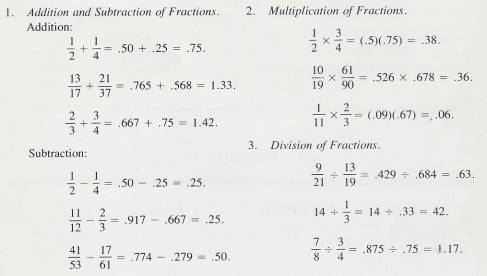

2.1.3.3.1 Two methods have been used to teach division of decimals. The older method required the student to move the decimal in the divisor (the number you are dividing by) enough places to the right to make the divisor a whole number. The decimal in the dividend was then moved to the right the same number of places, and division was carried out in the usual way. The new decimal places were identified with carets, and the decimal place in the quotient was just above the caret in the dividend. For example,

2.1.3.3.2 Example # 3a&b

2.1.3.3.2.1

2.1.3.3.2.2

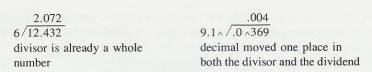

2.1.3.3.3 The newer method of teaching the division of

decimals is to multiply both the divisor and the dividend by the number that

will make both of them whole numbers. (Actually, this is the way the caret

method works also.) For example:

2.1.3.3.4 Example #4

2.1.3.3.4.1

2.1.3.3.5 Both of these methods work. Use the one you are more familiar with.

2.1.3.4

2.1.4 Fractions

2.1.4.1

In

general, there are two ways to deal with fractions

2.1.4.1.1 Convert the fraction to a decimal and

perform the operations on the decimals

2.1.4.1.2 Work directly with the fractions, using a set of rules for each operation. The rule for addition and subtraction is: convert the fractions to ones with common denominators, add or subtract the numerators, and place the result over the common denominator. The rule for multiplication is: multiply the numerators together to get the numerator of the answer, and multiply the denominators together for the denominator of the answer. The rule for division is: invert the divisor and multiply the fractions.

2.1.4.1.3 For statistics problems, it is usually easier to convert the fractions to decimals and then work with the decimals. Therefore, this is the method that we will illustrate. However, if you are a whiz at working directly with fractions, by all means continue with your method. To convert a fraction to a decimal, divide the lower number into the upper one. Thus, 3/4 = .75, and 13/17 = .765

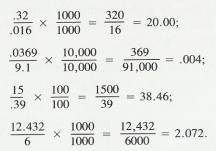

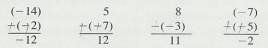

2.1.4.1.4 Examples Fractions

2.1.4.1.4.1

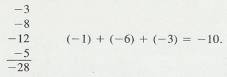

2.1.5 Negative Numbers

2.1.5.1

Addition

of Negative numbers

2.1.5.1.1 Any number without a sign is understood to

be positive

2.1.5.1.2 To add a series of negative numbers, add

the numbers in the usual way, and attach a negative sign to the total

2.1.5.1.3 Example #1

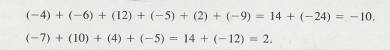

2.1.5.1.3.1

2.1.5.1.4 To add two numbers, one positive and one negative,

subtract the smaller number from the larger and attach the sign of the larger

to the result

2.1.5.1.5 Example #2

2.1.5.1.5.1

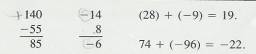

2.1.5.1.6 To add a series of numbers, of which some

are positive and some negative, add all the positive numbers together, all the

negative numbers together (see above) and then combine the two sums (see above)

2.1.5.1.7 Example #3

2.1.5.1.7.1

2.1.5.2

Subtraction

of Negative Numbers

2.1.5.2.1 To subtract a negative number, change it to

positive and add it. Thus

2.1.5.2.2 Example #4

2.1.5.2.2.1

2.1.5.3

Multiplication

of Negative Numbers

2.1.5.3.1 When the two numbers to be multiplied are

both negative, the product is positive

2.1.5.3.2 (-3)(-3)=9 (-6)(-8)=48

2.1.5.3.3 When one of the number is negative and the

other is positive, the product is negative

2.1.5.3.4 (-8)(3)=-24 14 X –2= -28

2.1.5.4

Division

of Negative Numbers

2.1.5.4.1 The rule in division is the same as the

rule in multiplication. If the two numbers are both negative, the quotient is

positive

2.1.5.4.2 (-10)  (-2)=-5 (-4)

(-2)=-5 (-4)  (-20)= .20

(-20)= .20

2.1.5.4.3 If one number is negative and the other

positive, the quotient is negative

2.1.5.4.4 (-10)  2= -5 6

2= -5 6  (-18)= -.33

(-18)= -.33

2.1.5.4.5 14  (-7)= -2 (-12)

(-7)= -2 (-12)  3= -4

3= -4

2.1.6 Proportions and Percents

2.1.6.1 A proportion is a part of a whole and can be expressed as a fraction or as a decimal. Usually, proportions are expressed as decimals. If eight students in a class of 44 received A's, we may express 8 as a proportion of the whole (44). Thus, 8/44, or .18. The proportion that received A's is .18.

2.1.6.2 To convert a proportion to a percent (per one hundred), multiply by 100. Thus: .18 x 100 = 18; 18 percent of the students received A's. As You can see proportions and percents are two ways to express the same idea.

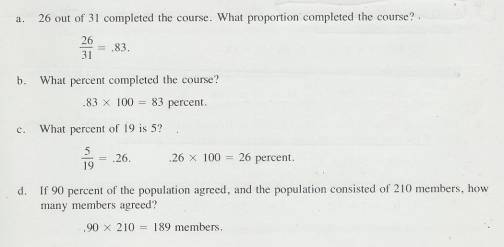

2.1.6.3

If

you know a proportion (or percent) and the size of the original whole, you can

find the number that the proportion represents. If .28 of the students were

absent due to illness,

and there are 50 students in all, then. 28 of the 50 were absent. (.28)(50) = 14 students who were absent. Here are some

more examples.

2.1.6.4

Example

Proportions and Percents

2.1.6.4.1

2.1.7 Absolute Value

2.1.7.1 The absolute value of a number ignores the sign of the number. Thus, the absolute value of -6 is 6. This is expressed with symbols as |-6| = 6. It is expressed verbally as "the absolute value of negative six is six. " In a similar way, the absolute value of 4 - 7 is 3. That is, |4 – 7| = | - 3| = 3.

2.1.8  Problems

Problems

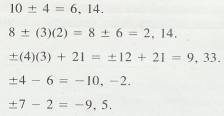

2.1.8.1

A  sign ("plus or minus"

sign) means to both add and subtract. A

sign ("plus or minus"

sign) means to both add and subtract. A  problem always has two answers.

problem always has two answers.

2.1.8.2

Example

Plus-Minus Problems

2.1.8.2.1

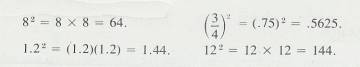

2.1.9 Exponents

2.1.9.1 .In the expression 52, 2 is the exponent. The 2 means that 5 is to be multiplied by itself. Thus, 52 = 5 x 5 = 25.

2.1.9.2

In

elementary statistics, the only exponent used is 2, but it will be used

frequently. When a number has an exponent of 2, the number is said to be

squared. The expression 42 (pronounced "four squared")

means 4 x4, and the product is 16. The squares of whole numbers between 1 and

1000 can be found in Tables in the Appendix of most stats text books.

2.1.9.3

Example

Exponents

2.1.9.3.1

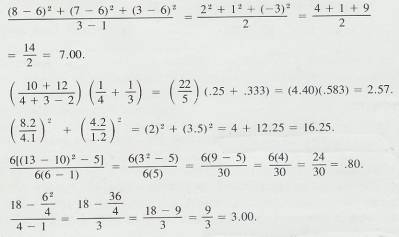

2.1.10

Complex

Expressions

2.1.10.1

Two

rules will suffice for the kinds of complex expressions encountered in

statistics.

2.1.10.1.1

Perform the

operations within the parentheses first. If there are brackets in the

expression, perform the operations within the parentheses and then the

operations within the brackets.

2.1.10.1.2

Perform the

operations in the numerator and those in the denominator separately, and

finally, carry out the division.

2.1.10.1.3

Example 5

2.1.10.1.3.1

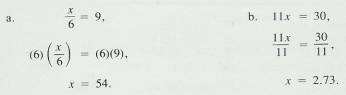

2.1.11

Simple

Algebra

2.1.11.1

To

solve a simple algebra problem, isolate the unknown (x) on one side of the

equal sign and combine the numbers on the other side. To do this, remember that

you can multiply or divide both sides of the equation by the same number without

affecting the value of the unknown. For example,

2.1.11.2

Example

6 a & b

2.1.11.2.1

2.1.11.2.2

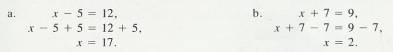

2.1.11.3

In

a similar way, the same number can be added to or subtracted from both sides of

the equation without affecting the value of the unknown.

2.1.11.4

Example

7

2.1.11.4.1

2.1.11.5

We

will combine some of these steps in the problems we will work for you. Be sure

you see shat operation is being performed on both sides in each step

2.1.11.6

Example

8

2.1.11.6.1

2.2 Rules, Symbols, and Shortcuts

2.2.1 Rounding Numbers

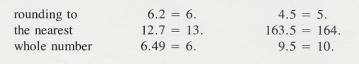

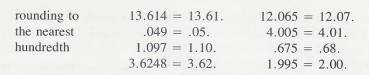

2.2.1.1

There

are two parts to the rule for rounding a number. If the digit that is to be

dropped is less than 5, simply drop it. If the digit to be dropped is 5 or

greater, increase the number to the left of it by one. These are the rules

built into most electronic calculators. These two rules are illustrated below

2.2.1.2

Example

9 a & B

2.2.1.2.1

2.2.1.2.2

2.2.1.3 A reasonable question is "How many decimal places should an answer in statistics have?" A good rule of thumb in statistics is to carry all operations to three decimal places and then, for the final answer, round back to two decimal places.

2.2.1.4

Sometimes this rule of thumb could get

you into trouble, though. For example, if half way through some work you had a

division problem of .0016  .0074, and if you

dutifully rounded those four decimals to three (.002

.0074, and if you

dutifully rounded those four decimals to three (.002  .007), you would get

an answer of .2857, which becomes .29. However, division without rounding gives

you an answer of .2162 or .22. The difference between .22 and .29 may be quite

substantial. We will often give you cues if more than two decimal places are

necessary but you will always need to be alert to the problems of rounding.

.007), you would get

an answer of .2857, which becomes .29. However, division without rounding gives

you an answer of .2162 or .22. The difference between .22 and .29 may be quite

substantial. We will often give you cues if more than two decimal places are

necessary but you will always need to be alert to the problems of rounding.

2.2.2 Square Roots

2.2.2.1 Statistics problems often require that a square root be found. Three possible solutions to this problem are

2.2.2.1.1 A calculator with a square-root key

2.2.2.1.2 The paper-and-pencil method

2.2.2.1.3 Use a Table the back of a statistics book.

2.2.2.2

Of

the three, a calculator provides the quickest and simplest way to find a square

root. If you have a calculator, you're set. The paper -and-pencil method is

tedious and error prone, so we will not discuss it. We'll describe

the use of Tables and we recommend that you use it if you don't have access to

a calculator.

2.2.2.3

Three

Digit Numbers

2.2.2.3.1

If you need the square root of a three-digit number (000 to

999), a table will give it to you directly. Simply look in the left-hand column

for the number and ad the square root in the third column, under  . For example;

the square root of 225 is 15.00, and

. For example;

the square root of 225 is 15.00, and  = 8.37. Square roots

are usually carried (or rounded) to two decimal places.

= 8.37. Square roots

are usually carried (or rounded) to two decimal places.

2.2.2.4

Numbers

between 0 and 10

2.2.2.4.1

For numbers between 0 and 10 that have two decimal places

(.01 to 9.99), The tables will give you the square root. Find your number in the left-hand column by

thinking of its decimal point as two places to the right. Find the square root

in the  column by moving the

decimal point one place to the left. For example,

column by moving the

decimal point one place to the left. For example,  = 1.50. Be sure you understand how these square roots were

found:

= 1.50. Be sure you understand how these square roots were

found:  = 2.52,

= 2.52,  = .66, and

= .66, and  = .28.

= .28.

2.2.2.5

Numbers

between 10 and

1000 That Have Decimals

2.2.2.5.1 For

numbers between 10 and 1000 with decimals interpolation is necessary. To

interpolate a value for  , find a value that is half way (.5 of the distance) between

, find a value that is half way (.5 of the distance) between  and

and  . Thus, the square root of 22.5 will be

(approximately) half way between 4.69 and 4.80, which is 4.74. For a second

example, we will find

. Thus, the square root of 22.5 will be

(approximately) half way between 4.69 and 4.80, which is 4.74. For a second

example, we will find  .

.  = 9.17, and

= 9.17, and  =9.22.

=9.22.  will be .35 into the interval between

will be .35 into the interval between  and

and

. That interval is .05 (9.22 - 9.17). Thus' (.35)(.05) = .02, and

. That interval is .05 (9.22 - 9.17). Thus' (.35)(.05) = .02, and  = 9.17 + .02 = 9.19. Interpolation is also necessary with numbers between 100 and 1000 that have decimals;

these can usually be estimated rather quickly because the difference between

the square roots of the whole numbers is so small. Look at the difference

between

= 9.17 + .02 = 9.19. Interpolation is also necessary with numbers between 100 and 1000 that have decimals;

these can usually be estimated rather quickly because the difference between

the square roots of the whole numbers is so small. Look at the difference

between  and

and  , for example.

, for example.

2.2.2.6 Numbers Larger Than 1000

2.2.2.6.1

For numbers larger than 1000, the square root can be estimated fairly closely by using the

second column in Table A (N 2). Find the large number under N2,

and read the square root from the N column. For example,  = 123, and

= 123, and  =34. Most large

numbers you encounter will not be found in the N2 column, and you

will just have to estimate the square root as closely as possible.

=34. Most large

numbers you encounter will not be found in the N2 column, and you

will just have to estimate the square root as closely as possible.

2.2.3 Reciprocals

2.2.3.1 This section is about a professional shortcut. This shortcut is efficient if multiplication is easier for you than division. If you prefer to divide rather than multiply, skip this section.

2.2.3.2

A

reciprocal of a number (N) is 1/N. Multiplying a number by 1/N

is equivalent to dividing it by N. For example, 8 2 = 8 x (1/2) = 8 x .5 = 4.0; 25 -7- 5 = 25 x (1/5) = 25 x .20 = 5.0. These examples are

easy, but we can also illustrate with more difficult

problems; 541

2 = 8 x (1/2) = 8 x .5 = 4.0; 25 -7- 5 = 25 x (1/5) = 25 x .20 = 5.0. These examples are

easy, but we can also illustrate with more difficult

problems; 541 98 =

541 x (1/98) = 541 x

.0102 = 5.52. So far, this should be clear, but there

should be one nagging question. How did we know that 1/98 = .01O2? The answer

is the versatile Table A. Table A contains a column 1/N, and, by looking

up 98, you will find that 1/98 = .0102.

98 =

541 x (1/98) = 541 x

.0102 = 5.52. So far, this should be clear, but there

should be one nagging question. How did we know that 1/98 = .01O2? The answer

is the versatile Table A. Table A contains a column 1/N, and, by looking

up 98, you will find that 1/98 = .0102.

2.2.3.3 If you must do many division problems on paper, we recommend reciprocals to you. If you have access to an electronic calculator, on the other hand, you won't need the reciprocals in Table A.

2.2.4 Estimating Answers

2.2.4.1

Just

looking at a problem and making an estimate of the answer before you do any

calculating is a very good idea. This is referred to as eyeballing the data and

Edward Minium (1978) has captured its importance with Minium's First Law of

Statistics: "The eyeball is the statistician's most powerful

instrument."

2.2.4.2

Estimating answers should keep you

from making gross errors, such as misplacing a decimal point. For example,

31.5/5 can be estimated as a little more than 6 If

you make this estimate before you divide, you are likely to recognize that an

answer of 63.or .63 is incorrect.

2.2.4.3

The

estimated answer to the problem (21)(108) is 2000, since (20)(100) = 2000.

2.2.4.4

The

problem (.47)(.20) suggests an estimated answer of .10, since (1/2)(.20) = .10.

With .10 in mind, you are not likely to write.94 for the answer, which is .094.

Estimating answers is also important if you are finding a square root. You can

estimate that  is about

10, since

is about

10, since  = 10;

= 10;  is about 1.

is about 1.

2.2.4.5

To

calculate a mean, eyeball the numbers and estimate the mean. If you estimate a

mean of 30 for a group of numbers that are primarily in the 20s, 30s, and 40s,

a calculated mean of 60 should arouse your suspicion that you have made an

error.

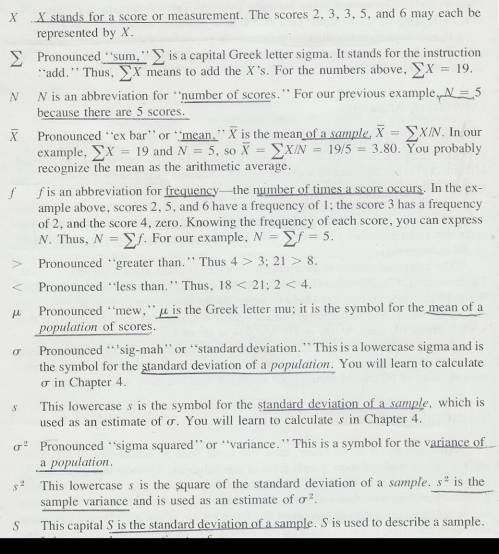

2.2.5 Statistical Symbols

2.2.5.1

Although as far as we know,

there has never been a clinical case of neoiconophobia (An extreme and unreasonable fear of

new symbols) some

students show a mild form of this behavior. Symbols like  ,

,  ,

and

,

and  may cause a

grimace, a frown, or a droopy eyelid. In more severe cases, the behavior

involves avoiding a statistics course entirely. We're rather sure that you

don't have such a severe case, since you have read this far. Even so, if you

are a typical beginning student in statistics, symbols like (

may cause a

grimace, a frown, or a droopy eyelid. In more severe cases, the behavior

involves avoiding a statistics course entirely. We're rather sure that you

don't have such a severe case, since you have read this far. Even so, if you

are a typical beginning student in statistics, symbols like ( ,

,  ,

,

and

and  are not very meaningful to you, and they may

even elicit feelings, of uneasiness. We also know from our teaching experience

that, by the end of the course, you will know what these symbols mean and be

able to approach them with an unruffled psyche-and perhaps even approach them

joyously. This section should help you over that initial, mild neoiconophobia,

if you suffer from it at all.

are not very meaningful to you, and they may

even elicit feelings, of uneasiness. We also know from our teaching experience

that, by the end of the course, you will know what these symbols mean and be

able to approach them with an unruffled psyche-and perhaps even approach them

joyously. This section should help you over that initial, mild neoiconophobia,

if you suffer from it at all.

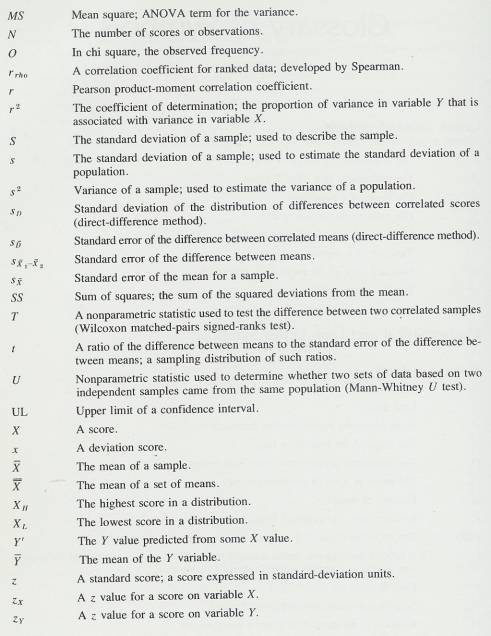

2.2.5.2

Below are definitions and

pronunciations of the symbols used in the next two chapters. Additional symbols will be defined

as they occur. Study this list until you know it.

2.2.5.3

Symbols

2.2.5.3.1

2.2.5.4 Pay careful attention to symbols. They serve as shorthand notations for the ideas and concepts you are learning. So, each time a new symbol is introduced, concentrate on it-learn it-memorize its definition and pronunciation. The more meaning a symbol has for you, the better you understand the concepts it represents and, of course, the easier the course will be.

2.2.5.5

Sometimes

we will need to distinguish between two different ( 's or two X's. We will use subscripts, and the

results will look like

's or two X's. We will use subscripts, and the

results will look like  1 and

1 and  2, or X1 and X2. Later, we will use subscripts other than

numbers to identify a symbol. You will see

2, or X1 and X2. Later, we will use subscripts other than

numbers to identify a symbol. You will see  x and erg. The point to learn here is

that subscripts are for identification purposes only; they never indicate

multiplication.

x and erg. The point to learn here is

that subscripts are for identification purposes only; they never indicate

multiplication.

does

not mean (

does

not mean ( )(

)( ).

).

2.2.5.6

Two

additional comments-to encourage and to caution you. We encourage you to do

more in this course than just read the text, work the problems, and pass the

tests, however exciting that may be. We encourage you to occasionally get

beyond this elementary text and read journal articles or short portions of

other statistics textbooks. We will indicate our recommendations with footnotes

at appropriate places. The word of caution that goes with this encouragement is

that reading statistics texts is like reading a Russian novel-the same

characters have different names in different places. For example, the mean of a

sample in some texts is symbolized M rather than  , and, in some texts, S.D.,

, and, in some texts, S.D.,  and

and  are used as

symbols for the standard deviation. If you expect such differences, it will be

less difficult for you to make the necessary translations.

are used as

symbols for the standard deviation. If you expect such differences, it will be

less difficult for you to make the necessary translations.

3 Central Values and the Organization of Data

3.1 Summary

3.1.1 A typical or representative

score from the sample population is a measure of central tendency.

3.1.2 Mode (Mo)

3.1.2.1

The

most frequently occurring score in the distribution.

3.1.2.2

Extreme

scores in the distribution do not affect the mode.

3.1.3 Median (Md)

3.1.3.1

This

score cuts the distribution of scores in half. That is half the scores in the

distribution fall above the middle score and half fall below the middle

score. The steps involved in computing

the median are

3.1.3.1.1 Rank the scores from lowest to highest

3.1.3.1.2 In the case of an odd number of scores pick

the middle score that divides the scores so that an equal number of scores are

above that score and an equal number are below that score. Example

3.1.3.1.2.1 2 5 7 8 12 14 18= 8 would be the median in

the aforementioned distribution of scores.

3.1.3.1.3 In the case of an even number of scores

pick the two middle scores which divide the scores so that an equal number of

scores are above those scores and an equal number of scores are below those

scores. Then add the two middle scores and divide the product by two. Example

3.1.3.1.3.1 2 5 7 8 12 14 18 20=8+12=20/2=10 would be

the median in the aforementioned distribution.

3.1.3.2

Extreme

scores in the distribution do not affect the median.

3.1.4 Mean  (Average)

(Average)

3.1.4.1

The

mean is the sum of scores divided by the number of scores.

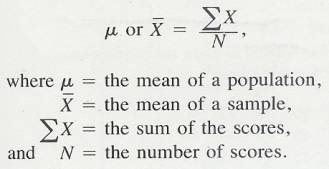

3.1.4.1.1 Formula

3.1.4.1.1.1

3.1.4.1.1.2 In the above formula X= the sum of the

scores and N= the number of scores.

3.1.4.2

Extreme

scores in the distribution will affect the mean.

3.1.4.3

The

term average is often used to describe the mean and is usually accurate.

Sometimes however the word average is used to describe other measures of

central tendency such as mode and median.

3.2 Introduction

3.2.1

Now that the preliminaries are out of

the way, you are ready to start on the basics of descriptive statistics. The

starting point is an unorganized group of scores or measures, all obtained from

the same test or procedure. In an experiment, the scores are measurements on

the dependent variable. Measures of central value (often called measures of

central tendency) give you one score or measure that represents or is typical

of, the entire group You will recall that in Chapter 1 we discussed the mean

(arithmetic average). This is one of the three central value statistics.

Recall from Chapter 1 that for every statistic there is also a parameter.

Statistics are characteristics of samples and parameters are characteristics of

population. Fortunately, in the case of the mean, the calculation of the parameter is identical to

the calculation of the statistic. This is not true for the standard deviation. (Chapter 4) Throughout this

book, we will refer to the sample mean (a statistic) with the symbol  pronounced

"ex-bar"-and to the population mean a parameter with the symbol

pronounced

"ex-bar"-and to the population mean a parameter with the symbol

pronounced

"mew."

pronounced

"mew."

3.2.2

However,

a mean based on a population is interpreted differently from a mean based on a sample. For a

population, there is only

one mean,  . Any sample, however, is only

one of many possible samples, and

. Any sample, however, is only

one of many possible samples, and  will vary from sample to

sample. A population mean is obviously better than a sample mean, but often it

is impossible to measure the entire population. Most of the time, then, we must resort to a sample and use

will vary from sample to

sample. A population mean is obviously better than a sample mean, but often it

is impossible to measure the entire population. Most of the time, then, we must resort to a sample and use  as an estimate of

as an estimate of  .

.

3.2.3 In this chapter you will learn to

3.2.3.1 Organize data gathered on a dependent measure,

3.2.3.2 Calculate central values from the organized data and determine whether they are statistics or parameters, and

3.2.3.3 Present the data graphically.

3.3 Finding the mean of Unorganized Data

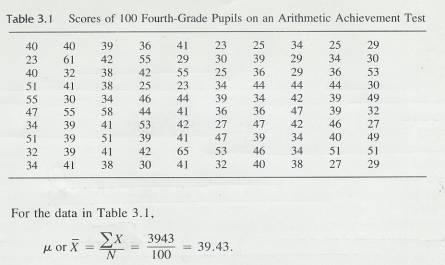

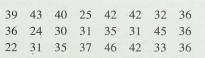

3.3.1 Table 3.1 presents the scores of 100 fourth-grade students on an arithmetic achievement test. These scores were taken from an alphabetical list of the students' names; therefore, the scores themselves are in no meaningful order. You probably already know how to compute the mean of this set of scores. To find the mean, add the scores and divide that sum by the number of scores.

3.3.2 Formula Mean

3.3.2.1

3.3.3 Table 3.1

3.3.3.1

3.3.4

If these 100 scores are a population,

then 39.43 would be a  , but if the 100 scores are a sample from some larger

population, 39.43 would be the sample mean,

, but if the 100 scores are a sample from some larger

population, 39.43 would be the sample mean,  .

.

3.3.5 This mean provides a valuable bit of information. Since a score of 40 on this test is considered average (according to the test manual that accompanies it), this group of youngsters, whose mean score is 39.43, is about average in arithmetic achievement.

3.4 Arranging Scores in Descending

Order and Finding the Median

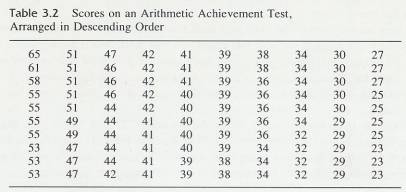

3.4.1 Look again at Table 3.1. If you knew that a score of 40 were considered average, could you tell just by looking that this group is about average? Probably not. Often, in research, so many measurements are made on so many subjects that just looking at all those numbers is a mind-boggling experience. Although you can do many computations using unorganized data, it is often very helpful to organize the numbers in some way. Meaningful organization will permit you to get some general impressions about characteristics of the scores by simply' 'eyeballing" the data (looking at it carefully). In addition, organization is almost a necessity for finding a second central value-the median.

3.4.2 One way of making some order out of the chaos in Table 3.1 is to rearrange the numbers into a list, from highest to lowest. Table 3.2 presents this rearrangement of the arithmetic achievement scores. (It is usual in statistical tables to put the high numbers at the top and the low numbers at the bottom.) Compare the unorganized data of Table 3.1 with the rearranged data of Table 3.2. The ordering from high to low permits you to quickly gain some insights that would have been very difficult to glean from the unorganized data. For example, by simply looking at the center of the table, you get an idea of what the central value is. The highest and lowest scores are readily apparent and you get the impression that there are large differences in the achievement levels of these children. You can see that some scores (such as 44) were achieved by several people and that some (such as 33) were not achieved by anyone. All this information is gleaned simply by quickly eyeballing the rearranged data.

3.4.3 Table 3.2

3.4.3.1

3.4.4 Error Detection

3.4.4.1 Eyeballing data is a valuable means of avoiding large errors. If the answers you calculate differ from what you expect on the basis of eyeballing, wisdom dictates that you try to reconcile the difference. You have either overlooked something when eyeballing or made a mistake in your computations.

3.4.5 This simple rearrangement of data also permits you to find easily another central value statistic, which can be found only with extreme difficulty from Table 3.1. This statistic is called the median. The median is defined as the point (Note that the median, like the mean, is a point and not necessarily an actual score.) on the scale of scores above, which half the scores fall and below which half the scores fall. That is, half of the scores are larger than the median, and half are smaller. Like the mean, the sample median is calculated exactly the same as the population median. Only the interpretations differ.

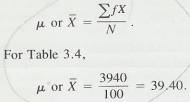

3.4.6 In Table 3.2 there are 100 scores; therefore, the median will be a point above which there are 50 scores and below which there are 50 scores. This point is somewhere among the scores of 39. Remember from Chapter 1, that any number actually stands for a range of numbers that has a lower and upper limit. This number, 39, has a lower limit of 38.5 and an upper limit of 39.5. To find the exact median somewhere within the range of 38.5-39.5 you use a procedure called interpolation. We will give you the procedure and the reasoning that goes with it at the same time. Study it until you understand it. It will come up again.

3.4.7 There are 42 scores below 39. You will need eight more (50 - 42 = 8) scores to reach the median. Since there are ten scores of 39, you need 8/10 of them to reach the median. Assume that those ten scores of 39 are distributed evenly throughout the interval of 38.5 and 39.5 and that, therefore, the median is 8/10 of the way through the interval. Adding. 8 to the lower limit of the interval, 38.5, gives you 39,3, which is the median for these scores.

3.4.8 There are occasions when you will need the median of a small number of scores. In such cases, the method we have just given you will work, but it usually is not necessary to go through that whole procedure. For example, if N is an odd number and the middle score has a frequency of 1, then it is the median. In the five scores 2, 3, 4, 12, 15, the median is 4. If there had been more than one 4, interpolation would have to be used.

3.4.9 When N is an even number, as in the six scores 2, 3, 4, 5, 12, 15, the point dividing the scores into two equal halves will lie halfway between 4 and 5. The median, then, is 4.5. If there had been more than one 4 or 5, interpolation would have to be used. Sometimes the distance between the two middle numbers will be larger, as in the scores 2, 3, 7, 11. The same principle holds: the median is halfway between 3 and 7. One-way of finding that point is to take the mean of the two numbers: (3 + 7) / 2 = 5, which is the median.

3.4.10 There is no accepted symbol to differentiate the median of a population from the median of a sample. When we need to make this distinction, we do it with words.

3.5 The Simple Frequency

Distribution

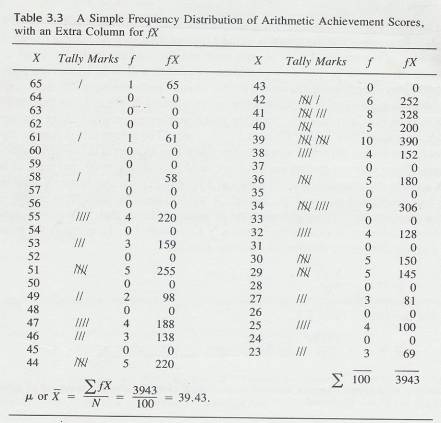

3.5.1 A more common (and often more useful) method of organizing data is to construct a simple frequency distribution. Table 3.3 is a simple frequency distribution for the arithmetic achievement data in Table 3.1.

3.5.2 The most efficient way to reduce unorganized data like Table 3.1 into a simple frequency distribution like Table 3.3 is to follow these steps:

3.5.2.1 Find the highest and lowest scores. In Table 3.1, the highest score is 65 and the lowest score is 23.

3.5.2.2

In column form, write down in

descending order all possible scores between the highest score (65) and the

lowest score (23). Head this column with the letter X.

3.5.2.3

Start

with the number in the upper-left-hand comer of the unorganized scores (a score

of 40 in Table 3.1),

draw a line through it, and place a tally

mark beside 40 in your frequency distribution.

3.5.2.4

Continue

this process through all the scores.

3.5.2.5

Count

the number of tallies by each score and place that number beside the tallies in

the column headed ƒ. Add up the

numbers in the ƒ column to be sure they equal N You

have now constructed a simple frequency distribution.

3.5.2.6 0ften, when simple frequency distributions are presented formally, the tally marks and all scores with a frequency of zero are deleted.

3.5.3 Don't worry about the ƒ X column in Table 3.3 yet. It is not part of a simple frequency distribution, and we will discuss it in the next section.

3.5.4 Table 3.3

3.5.4.1

3.6 Finding Central Values of a

Simple Frequency Distribution

3.6.1 Mean

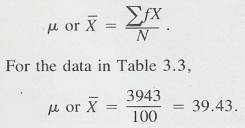

3.6.1.1

Computation of the mean from a simple

frequency distribution is illustrated in table 3.3. Remember that the numbers

in the ƒ column represent the number of people

making each of the scores. To get N, you must add the numbers in the f

column because that's where the people are represented. If you are a

devotee of shortcut arithmetic, you may already have discovered or may already

know the basic idea behind the procedure: multiplication is shortcut addition.

In Table 3.3, the column headed ƒ X means what it says algebraically: multiply f (the number of

people making a score) times X, (the score they made) for each of the scores.

The reason this is done. is that everyone who made a particular score must be

taken into account in the computation of the mean. Since only one person made a

score of 65, multiply 1 x 65, and put a 65 in the ƒ

X column. No one made a score of 64 and 0 x 64 = 0;

put a zero in the ƒ X column. Since

four people had scores of 55, multiply 4 x 55 to get 220. After ƒX is computed for all scores, obtain  ƒX by adding up the ƒX

column. Notice that

ƒX by adding up the ƒX

column. Notice that  ƒX in the simple frequency distribution is

exactly the same as

ƒX in the simple frequency distribution is

exactly the same as  :X in Table 3.1. To compute the mean from a simple frequency

distribution, use the formula

:X in Table 3.1. To compute the mean from a simple frequency

distribution, use the formula

3.6.1.2

Mean

Frequency distribution

3.6.1.2.1

3.6.2 Median

3.6.2.1 The procedure for finding the median of scores arranged in a simple frequency distribution is the same as that for scores arranged in descending order, except that you must now use the frequency column to find the number of people making each score

3.6.2.2 The median is still the point with half the scores above and half below it, and is the same point whether you start from the bottom of the distribution or from the top. If you start from the top of Table 3.3, you find that 48 people have scores of 40 or above. Two more are needed to get to 50, the halfway point in the distribution. There are ten scores of 39, and you need two of them. Thus 2/10 should be subtracted from 39.5 (the lower limit of the score of 40); 39.5 - .2 = 39.3.

3.6.2.3

Error

Detection

3.6.2.3.1 Calculating the median by starting from the

top of the distribution will produce the same answer as calculating it by

starting from the bottom.

3.6.3 Mode

3.6.3.1 You may also find the third central-value statistic from the simple frequency distribution. This statistic is called the mode. The mode is the score made by the greatest number of people-the score with the greatest frequency.

3.6.3.2 Distribution may have more than one mode. A bimodal distribution is one with two high frequency scores separated by one or more low frequency scores. However, although a distribution may have more than one mode, it can have only one mean and one median.

3.6.3.3 A sample mode and a population mode are determined in the same way.

3.6.3.4 In Table 3.3, more people had a score of 39 than any other score, so 39 is the mode. You will note, however, that it was close. Ten people scored 39, but nine scored 34 and eight scored 41. A few lucky guesses by children taking the achievement test could have caused significant changes in the mode. This instability of the mode limits its usefulness. .

3.7 The Grouped Frequency

Distribution

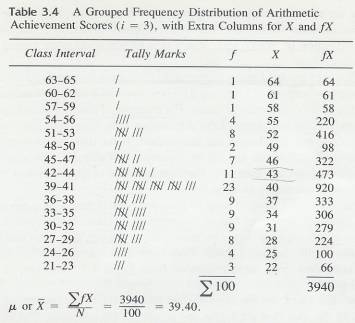

3.7.1 There is a way of condensing the data of Table 3.1 even further. The result of such a condensation is called a grouped frequency distribution and Table 3.4 is an example of such a distribution, again using the arithmetic achievement-test scores.5

3.7.2 A formal grouped frequency

distribution does not include the tally marks or the X and ƒX columns.

3.7.3 The grouping of data began as a-way of simplifying computations in the days before the invention of all these marvellous computational aids such as computers and calculators. Today, most researchers group their data only when they want to construct a graph or when N's are very large. These two occasions happen often enough to make it important for you to learn about it.

3.7.4 In the grouped frequency distribution, X values are grouped into

ranges called class

intervals. In Table 3.4, the entire range of

scores, from 65 to 23 has been reduced to 15 class intervals, each interval

covers three scores and, the size of the interval (the number of scores

covered) is indicated by i. For Table 3.4, i = 3. The midpoint of each interval

represents all scores in that interval for example, there were nine children who

had scores of 33, 34 or 35. The midpoint of the class interval 33-35 is 34. All

nine children are represented by 34. Obviously, this procedure may introduce

some inaccuracy into computations; however, the amount of error introduced is

usually very slight. For example, the mean computed from Table 3.4 is 39.40. The

mean computed from

ungrouped data is 39.43.

3.7.5 Class intervals have upper and lower limits, much like simple scores obtained by measuring a quantitative variable. A class interval of 33-35 has a lower limit of 32.5 and an upper limit of 35.5. Similarly, a class interval of 40-49 has a lower limit of 39.5 and an upper limit of 49.5.

3.7.6 Table 3.4

3.7.6.1

3.7.7 Establishing Class Intervals

3.7.7.1 There are three conventions that are usually followed in establishing class intervals. We call them conventions because they are customs rather than hard-and-fast rules. There are two justifications for these conventions. First, they allow you to get maximum information from your data with minimum effort. Second, they provide some standardization of procedures, which aids in communication among scientists. These conventions are

3.7.7.2

Data

should be grouped into not fewer than 10 and not more than 20 class

intervals.

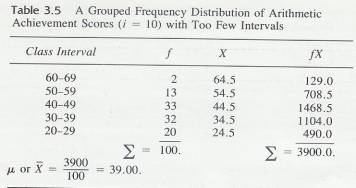

3.7.7.2.1 The primary purpose of grouping data is to provide a clearer picture of trends in the data and to make computations easier. (For example, Table 3.4 shows that there are normally frequencies near the center of the distribution with fewer and fewer as the upper and lower ends of the distribution are approached. If the data are grouped into fewer than 10 intervals, such trends are not as apparent. In Table 3.5, the same scores are grouped into only five class intervals. The concentration of frequencies in the center of the distribution is not nearly so apparent.

3.7.7.2.2 Another reason for using at least 10 class intervals is that, as you reduce the number of class intervals, the errors caused by grouping increase. With fewer than 10 class intervals, the errors may no longer be minor. For example, the mean computed from Table 3.4 was 39.40-only .03 points away from the exact mean of 39.43 computed from ungrouped data. The mean computed from Table 3.5, however, is 39.00-an error of .43 points.

3.7.7.2.3 On the other hand, the use of more than 20 class intervals may tend to exaggerate fluctuations in the data that are really due. to chance occurrences. . You also sacrifice much of the ease of computation, with little gain in control over errors. So, the convention is: use 10 to 20 class intervals.

3.7.7.3

The size of the class intervals (i)

should be an odd number or 10 or a multiple of 10. (Some writers include i= 2 as acceptable. Some

also object to the use of i= 7 or 9. In actual practice, the most frequently

seen i's are 3, 5, 10, and multiples of 10.)

3.7.7.3.1

The reason for this is simply computational ease. The

midpoint of the interval is used as representative of all scores in the

interval; and if i is an odd number,

the midpoint will be a whole number. If hs an even number, the midpoint will

be a decimal number. In the interval 12-14 (i = 3), the midpoint is the whole

number 13. In an interval 12 to 15 (i = 4), the midpoint is the decimal number

13.5. However, if the range of scores is so great that you cannot include all

of them in 20 groups with i = 9 or less, it is conventional to

place 10 scores or a multiple of 10 in each class interval.

3.7.7.4

Begin each class interval with

a multiple of .i.

3.7.7.4.1 For example, if the lowest score is 44 and i = 5, the first class interval should be 40-44 because 40 is a multiple of 5. This convention is violated fairly often. However, the practice is followed more often than not. A violation that seems to be justified occurs when i = 5. When the interval size is 5, it may be more convenient to begin the interval such that multiples of 5 will fall at the midpoint, since multiples of 5 are easier to manipulate. For example, an interval 23-27 has 25 as its midpoint, while an interval 25-29 has 27 as its midpoint. Multiplying by 25 is easier than multiplying by 27.

3.7.7.4.2 In addition to these three conventions, remember that the highest scores go at the top and the lowest scores at the bottom.

3.7.8 Converting Unorganized Data into

a Grouped Frequency Distribution

3.7.8.1 Now that you know the conventions for establishing class intervals, we will go through the steps for converting a mass of data like that in Table 3.1 into a grouped frequency distribution like Table 3.4:

3.7.8.2 Find the highest and lowest scores. In Table 3.1, the highest score is 65, and the lowest score is 23.

3.7.8.3 Find the range of scores by subtracting the lowest score from the highest and adding 1: 65 - 23 + I = 43. The 1 is added so that the upper limit of the highest score and the lower limit of the lowest score will be included.

3.7.8.4 Determine i by a trial-and-error procedure. Remember that there are to be 10 to 20 class intervals and that the interval size should be odd, 10, or a multiple of 10. Dividing the range by a potential i value tells the number of class intervals that will result. For example, dividing the range of 43 by 5 provides a quotient of 8.60. Thus, i = 5 produces 8.6 or 9 class intervals. That does not satisfy the rule calling for at least 10 intervals, but it is close and might be acceptable. In most such cases, however it is better to use a smaller I and get a larger number of intervals. Dividing the range by 3 (43/3) gives you 14.33 or 15 class intervals. It sometimes happens that this process results in an extra class interval. This occurs when the lowest score is such that extra scores must be added to the bottom of the distribution to start the interval with a multiple of i. For the data in Table 3.1, the most appropriate interval size is 3, resulting in 15 class intervals.

3.7.8.5 Begin the bottom interval with the lowest score. if it is a multiple of i. If the lowest score is not a multiple of i, begin the interval with the next lower number that is a multiple of i. In the data of Table 3.1, the lowest score, 23, is not a multiple of i. Begin the interval with 21. since it is a multiple of 3. The lowest class interval, then, is 21-23. From there on, it's easy. Simply' begin the next interval with the next number and end it such that it includes three numbers (24-26). Look at the class intervals in Table 3.4. Notice that each interval begins with a number evenly divisible by 3.

3.7.8.6

3.7.8.7

Table

3-5

3.7.8.7.1

3.7.8.8

The

rest of the process is the same as for a simple frequency distribution. For

each score in the unorganized data, put a tally mark beside its class interval

and cross out the score. Count the tally marks and put the number into the

frequency column. Add the frequency column to be sure that:  ƒ= N.

ƒ= N.

3.7.8.9

Clue

to the Future

3.7.8.9.1 The distributions that you have been

constructing are empirical distributions based on scores actually gathered in

experiments. This chapter and the next two are about these empirical frequency

distributions. Starting with Chapter 8, and throughout the rest of the book,

you will also make use of theoretical distributions-distributions based on

mathematical formulas and logic rather than on actual observations.

3.8 Finding Central Values of a

Grouped Frequency Distribution

3.8.1 Mean

3.8.1.1

The procedure for finding the mean of

a grouped frequency distribution is similar to that for the simple frequency

distribution. In the grouped distribution, however, the midpoint of each

interval represents all the scores in the interval. Look again at Table 3.4.

Notice the column headed with the letter X. The numbers in that column are the

midpoints of the intervals. Assume that the scores in the interval are evenly

distributed throughout the interval. Thus, X is the mean for all scores within

the interval. After the X column is filled, multiply each X by its ƒ value in order to include all frequencies in that interval. Place

the product in the ƒ X column.

Summing the ƒ X ..column

provides  ƒX, which, when

divided-by N, yields the mean. In terms of a formula,

ƒX, which, when

divided-by N, yields the mean. In terms of a formula,

3.8.1.2

Formula

3.8.1.2.1

3.8.2 Median

3.8.2.1 Finding the median of a grouped distribution requires interpolation within the interval containing the median. We will use the data in Table 3.4 to illustrate the procedure. Remember that the median is the point in the distribution that has half the frequencies above it and half the frequencies below it. Since N= 100, the median will have 50 frequencies above it and 50 below it. Adding frequencies from the bottom of the distribution, you find that there are 42 who scored below the interval 39-41. You need 8 more frequencies (50 - 42 = 8) to find the median. Since 23 people scored in the interval 39-41, you need 8 of these 23 frequencies or 8/23. Again, you assume that the 23 people in the interval are evenly distributed through the interval. Thus, you need the same proportion of score points in the interval as you have frequencies-that is, 8/23 or, 35 of the 3 score points in the interval. Since .35 x 3 = 1.05, you must go 1.05 score points into the interval to reach the median. Since the lower limit of the interval is 38.5, add 1.05 to find the median, which is 39.55. Figure 3.1 illustrates this procedure.

3.8.2.2 In summary, the steps for finding the median in a grouped frequency distribution are as follows.

3.8.2.3 Divide N by 2

3.8.2.4

Starting at the bottom of the

distribution, add the frequencies until you find the interval containing the

median

3.8.2.5

Subtract

from N/2 the total frequencies of all intervals below the interval

containing the median.

3.8.2.6

Divide

the difference found in step 3 by the number of frequencies in the interval

containing the median.

3.8.2.7

Multiply

the proportion found in step 4 by i

3.8.2.8

Add

the product found in step 5 to the lower limit of the interval containing the

median. That sum is the median.

3.8.2.9

Figure

3.1

3.8.2.9.1

3.8.3 Mode

3.8.3.1

The third central value, the mode, is

the midpoint of the interval having the greatest number of frequencies. In Table 3.4, the interval 39-41 has the greatest number of frequencies-23. The midpoint of

that interval, 40, is the mode.

3.9 Graphic Presentation of Data

3.9.1

In order to better communicate your

findings to colleagues (and to understand them better yourself), you will often

find it useful to present the results in the form of a graph. It has been said,

with considerable truth, that one picture is worth a thousand words; and a

graph is a type of picture. Almost any data can be presented graphically. The

major purpose of a graph is to get a clear, overall picture of the data.

3.9.2

Graphs are composed of a

horizontal axis (variously called the baseline, X axis or abscissa) and

a vertical axis called the Y-axis or ordinate. We will take

what seems to be the simplest course and use the terms X and Y.

3.9.3 We will describe two kinds of

graphs. The first kind is used to present frequency distributions like those

you have been constructing. Frequency polygons, Histograms, and bar graphs are

examples of this first kind of graph. The second kind we will describe is the

line graph, which is used to present the relationship

between two different variables.

3.9.4 Illustration XY Axis

3.9.4.1

3.9.5 Presenting Frequency

Distributions

3.9.5.1

Whether you use a frequency polygon, a

histogram, or a bar graph to present a frequency distribution depends on the

kind of variable you have measured. A frequency polygon or histogram is used

for quantitative data, and the bar graph is used for qualitative data. It is

not wrong to use a bar graph for quantitative data; but most researchers follow

the rule given above. Qualitative data, however, should not be presented

with a frequency polygon or a histogram. The arithmetic achievement scores

(Table 3.1) are an example of quantitative data.

3.9.5.2

Frequency

Polygon

3.9.5.2.1 Figure

3.2 shows a-frequency polygon based on the frequency distribution in Table 3.4.

We will use it to demonstrate the characteristics of all frequency polygons. On

the X-axis we placed the midpoints of the class intervals. Notice that the

midpoints are spaced at equal intervals, with the smallest midpoint at the left

and the largest midpoint at the right. The Y-axis

is labeled "Frequencies” and is also marked off into equal intervals.

3.9.5.2.2 Graphs are designed to "look right." They look right if the height of the figure is 60 percent to 75 percent of its length. Since the midpoints must be plotted along the X axis, you must divide the Y axis into units that will satisfy this rule. Usually, this requires a little juggling on your part. Darrell Huff (1954) offers an excellent demonstration of the misleading effects that occur when this convention is violated.

3.9.5.2.3 The

intersection of the X and Y axes is

considered the zero point for both variables. For the Y-axis in Figure 3.2, this is indeed the case. The distance on the Y axis is the same from zero to two as

from two to four, and so on. On the X axis, however, that is not the case.

Here, the scale jumps from zero to 19 and then is divided into equal units of

three. It is conventional to indicate a break in the measuring scale by

breaking the axis with slash marks between zero and the lowest score used, as

we did in Figure 3.2. It is also conventional to close a polygon at both ends by

connecting the curve to the X-axis.

3.9.5.2.4 Each point of the frequency polygon represents two numbers; the class midpoint directly below it on the X-axis and the frequency of that class directly across from it on the Y-axis. By looking at the points in Figure 3.2, you can readily see that three people are represented by the midpoint 22, nine people by each of the midpoints 31, 34, and 37, 23 people by the midpoint 40, and so on.

3.9.5.2.5 The

major purpose of the frequency polygon is to gain an overall view of the

distribution of scores. Figure 3.2 makes it clear, for example, that the

frequencies are greater for the lower scores than for the higher ones. It also

illustrates rather dramatically that the greatest number of children scored in

the center of the distribution.

3.9.5.3

Figure

3-2

3.9.5.3.1

3.9.5.4

Histogram

3.9.5.4.1 Figure 3.3 is a histogram constructed from the same data that were used for the frequency polygon of Figure 3.2. Researchers may choose either of these methods for a given distribution of quantitative data, but the frequency polygon is usually preferred for several reasons: it is easier to construct, gives a generally clearer picture of trends in the data, and can be used to compare different distribution, on the same graph. However frequencies are easier to read from a histogram.

3.9.5.4.2 Figure 3-3

3.9.5.4.2.1

3.9.5.4.3 Actually, the two figures are very

similar. They differ only in that the histogram is made by raising bars from

the X axis to the appropriate frequencies instead of plotting points above the

midpoints. The width of a bar is from the lower to the upper limit of its class

interval. Notice that there is no space between the bars.

3.9.5.5

Bar

Graph

3.9.5.5.1 The third type of graph that presents frequency distributions is the bar graph. A bar graph presents frequencies of the categories of a qualitative variable. An example of a qualitative variable is laundry detergent; the there are many different brands (types of the variable), but the brands don't tell you the order they go in, for example

3.9.5.5.2 With quantitative variables, the measurements

of the variable impose an order on themselves. Arithmetic achievement scores

of 43 and 51 tell you the order they belong in. "Tide" and

"Lux" do not signify any order.

3.9.5.5.3 Figure 3.4 is an example of a bar graph. Notice that each bar is separated by a small space. This bar graph was constructed by a grocery store manager who had a practical problem to solve. One side of an aisle in his store was stocked with laundry detergent, and he had no more space for this kind of product. How much of the available space should he allot for each brand? For one week, he kept a record of the number of boxes of each brand sold. From this frequency distribution of scores on a qualitative variable, he constructed the bar graph in Figure 3.4. (He, of course, used the names of the brands. We wouldn't dare!)

3.9.5.5.4 Brands

E, H, and K are obviously the big sellers and should get the greatest amount of

space. Brands A and D need very little space. The other brands fall between

these. The grocer, of course, would probably consider the relative profits from

the sale of the different brands in order to determine just how much space to

allot to each. Our purpose here is only to illustrate the use of the bar graph

to present qualitative data.

3.9.6 The Line Graph

3.9.6.1 Perhaps the most frequently used graph in scientific books and journal articles is the line graph. A line graph is used to present the relationship between two variables.

3.9.6.2 . A point on a line -graph represents the two scores made by' one person on each of the two variables. Often, the mean of a group is used rather than one person, but the idea is the same: a group with a mean score of X on one variable had a mean score of Y on the other variable. The point on the graph represents the means of that group on both variables.

3.9.6.3

Figure

3-4 & 3-5

3.9.6.3.1

3.9.6.4

Figure

3-6

3.9.6.4.1

3.9.6.5

Figure

3-7

3.9.6.5.1

3.9.6.6

Figure

3-8

3.9.6.6.1

3.9.6.7

Figure 3.5 is an example of a line

graph of the relationship between subjects scores on an anxiety test and their

scores on a difficult problem-solving task. Many studies have discovered this

general relationship. Notice that performance on the task is better and better

for subjects with higher and higher anxiety scores up to the middle range of

anxiety. But as anxiety scores continue to increase, performance scores decrease.

Chapter 5, "Correlation and Regression," will make extensive use of a

version of this type of line graph.

3.9.6.8 A variation of the line graph places performance scores on the Y-axis and some condition of training on the X-axis. Examples of such training conditions are: number of trials, hours of food deprivation, year in school, and: amount of reinforcement. The "score” on the training condition is assigned by the experimenter.

3.9.6.9 Figure 3.6 is a generalized learning curve with a performance measure (scores) on Y axis and number of reinforced trials on the .X axis. Early in training (after only one or two trials), performance is poor. As trials continue, performance improves rapidly at first and then more and more slowly. Finally, at the extreme right-hand portion of the graph, performance has levelled off; continued trials do not produce further changes in the scores.

3.9.6.10 A line graph, then, presents a picture of the relationship between two variables. By looking at the line, you can tell what changes take place in the Y variable as the value of the X variable changes.

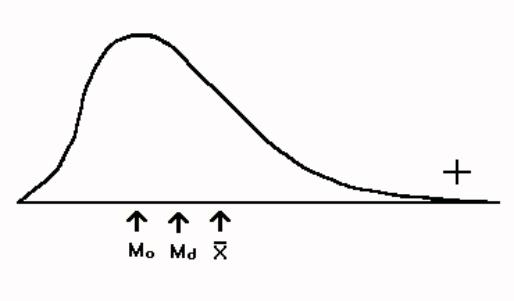

3.10 Skewed Distributions

3.10.1 Look back at Table 3.4 graphed as Figure 3.2. Notice that the largest frequencies are found in the middle of the distribution. The same thing is true in Problem 3 of this chapter. These distributions are not badly skewed; they are reasonably symmetrical ln some data, however, the largest frequencies are found at one end of the distribution rather than in the middle. Such distributions are said to he skewed.

3.10.2 The word skew is similar to the word skewer, the name of the cooking implement used !n making shish kebab. A skewer is long and pointed and is thicker at one end than the other (not symmetrical). Although skewed distributions do not function like skewers (you would have a terrible time poking one through a chunk of lamb), the, name does help you remember that a skewed distribution has a thin point on one side.

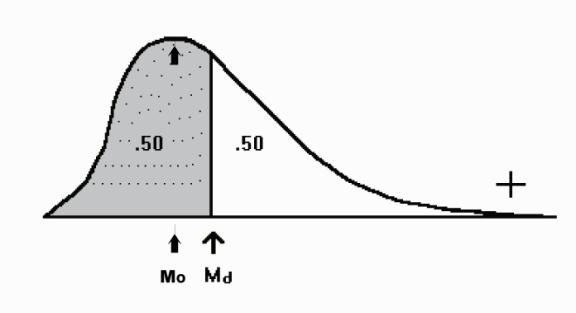

3.10.3

Figures 3.7 and 3.8 are illustrations

of skewed distributions. Figure 3.7 is positive skewed; the thin point is toward the high scores, and

the most frequent scores are low ones. Figure 3.8 is negatively skewed; the

thin point or skinny end is toward .the low scores and most frequent scores are

high ones.

3.10.4

There

is a mathematical of measuring the degree of skewness that is more precise

than eyeballing, but it is beyond the scope of this book. However, figuring the

relationship of the mean to the median is an objective way to determine the direction

of the skew. When the mean is numerically smaller than the median, there is some amount of negative

skew.

3.10.5

Figure

3-9

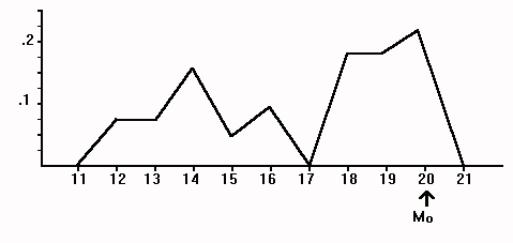

3.10.5.1

3.10.6 When the mean is larger than the median there is positive skew. The reason for this is that the mean is affected by the size of the numbers and is pulled in the direction of the extreme scores. The median is not influenced by the size of the scores. The relationship between the mean and the median is illustrated by Figure 3.9. The size of the difference between the mean and the median gives you an indication of how much the distribution is skewed.

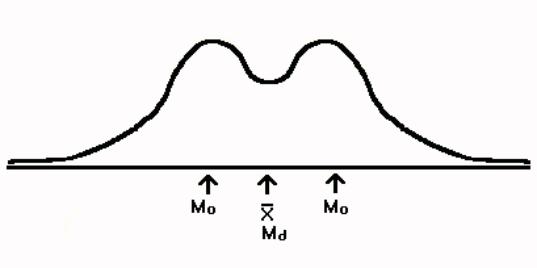

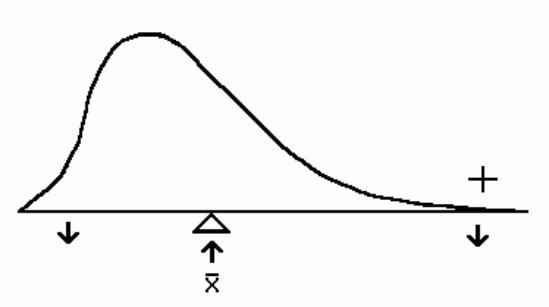

3.11 The Mean, Median, and Mode Compared

3.11.1

A common question is [Which measure of

central value should I use?" The general answer is "Given a choice,

use the mean. " Sometimes, however, the data give you no choice. For example if the frequency

distribution is for a nominal variable the mode is the

only appropriate measure of central value.

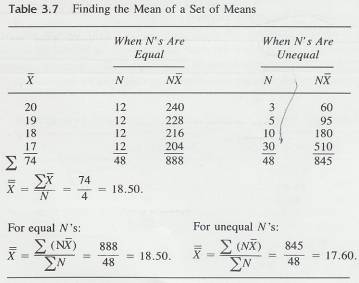

3.11.2 Figure 3-10

3.11.2.1

3.11.3 It is meaningless to find a median or to add up the scores and divide to find a mean for data based on a nominal scale. For the data from the voting-behavior experiment the mode is the only measure of central value that is meaningful. For a frequency distribution of an ordinal variable, the median or the mode is appropriate. For data based on interval or ratio data, the mean, median or mode may be used-you have a choice.

3.11.4 Even if you have interval or ratio data, there are two situations in which the mean is inappropriate because it gives an erroneous impression of the distribution. The first situation is the case of a severely skewed distribution. The following story demonstrates why the mean is inappropriate for severely skewed distributions.

3.11.5 The developer of Swampy Acres Retirement Home sites is attempting, with a computer-selected mailing list, to sell the lots in his southern paradise to northern buyers. The marks express concern that flooding might occur. The developer reassures them by explaining that the average elevation of his lots is 78.5 feet and that the water has never exceeded 25 feet in that area. On the average, he has told the truth; but this average truth is misleading. Look at the actual lay of the land in Figure 3.10 and examine the frequency distribution in Table 3.6, which summarizes the picture.

3.11.6 The mean elevation as the developer said is 78.5 feet; however, only 20 lots, all on a cliff, are out of the flood zone. The other 80 lots are, on the average, under water. The mean, in this case, is misleading. In this instance, the central value that describes the typical case is the median because it is unaffected by the size of the few extreme lots on the cliff. The median elevation is 12.5 feet, well below the high-water mark.

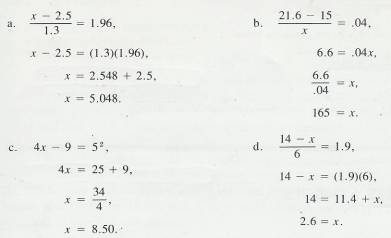

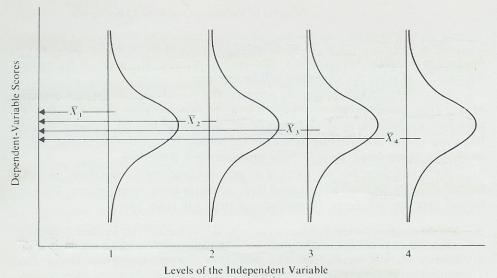

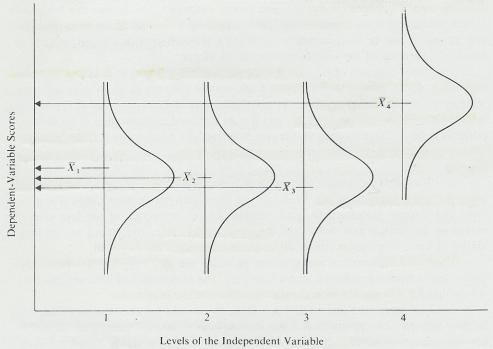

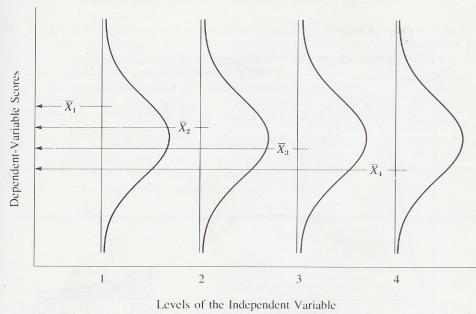

3.11.7 Darrell Huff's delightful and informative book, How to Lie with Statistics (1954) gives a number of such examples. We heartily recommend this book to you. It provides many cautions concerning misinformation conveyed through the use of the inappropriate statistic. A more recent and equally delightful book is Flaws and Fallacies in Statistical Thinking by Stephen Campbell (1974).